Artificial Intelligence (AI) algorithms are rapidly being integrated into many facets of our lives, from personalized recommendations to autonomous vehicles. However, one of the most significant obstacles to AI adoption is the lack of transparency in how these algorithms arrive at their decisions. Many AI models operate as “black boxes,” making it difficult for users to understand why a particular decision was made. This opacity not only undermines trust in AI systems but also raises concerns about bias, accountability, and ethics. To address these challenges, explainable AI (XAI) has emerged as a critical area of research and development, offering methods that make AI models more transparent and interpretable.

Understanding the Importance of Model Transparency

In complex AI systems such as deep neural networks, a prediction emerges from a very large number of parameters interacting across many nonlinear layers, so no single step of the computation explains the result. Users are therefore often left without a clear understanding of how the model arrived at a specific prediction or decision. Model transparency is essential for several reasons, including:

- Building Trust: Users are more willing to rely on a model's decisions when they can see why those decisions were made, especially in high-stakes applications such as healthcare, finance, and law enforcement.

- Detecting Bias: Transparent AI models enable stakeholders to identify and mitigate biases in the data or in the algorithms themselves, for example by revealing that a model leans heavily on a feature that acts as a proxy for a protected attribute.

- Ensuring Accountability: When a model's reasoning can be inspected, the people who deploy it can explain and justify its decisions and answer for its errors.

Explainable AI Techniques for Enhancing Transparency

- Feature Importance Analysis: This technique ranks input features by how strongly they influence the model's output, either across a whole dataset (global importance) or for a single prediction (local importance). Highlighting the factors that drive predictions gives users insight into why the model behaves as it does.

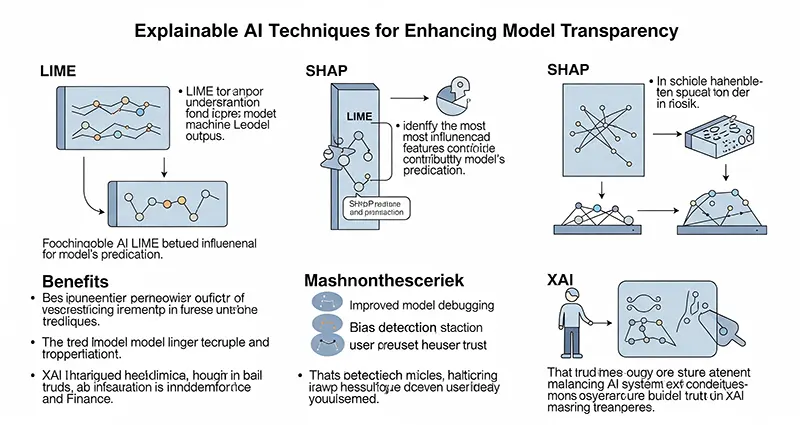

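- Local Interpretable Model-Agnostic Explanations (LIME): LIME explains individual predictions of any complex model. It perturbs the input around the instance being explained, queries the model on these perturbed samples, and fits a simple interpretable model (typically a sparse linear model) in which each sample is weighted by its proximity to the original instance. The surrogate only needs to be faithful in that local neighborhood, and its coefficients show which factors contributed to that specific outcome.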

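- SHAP (SHapley Additive exPlanations): SHAP attributes a model's prediction to its input features using Shapley values from cooperative game theory. Each feature's SHAP value is a weighted average of its marginal contributions to the prediction across all subsets of the other features, and the values are additive: they sum to the difference between the model's prediction and a baseline (expected) prediction. Computed over many predictions, SHAP values also show how different variables influence the model's decisions overall.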

- Decision Trees and Rule-Based Models: Decision trees and rule-based models are interpretable by design rather than explained after the fact. Each prediction follows a sequence of explicit if-then conditions, so the logic behind it can be read directly from the branches or rules. This advantage is greatest when the tree or rule set is kept small, since a very deep tree can become nearly as hard to follow as an opaque model.

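- Layer-wise Relevance Propagation (LRP): LRP explains a deep neural network's prediction by propagating the output score backward through the network, layer by layer. At each layer, every neuron receives a share of the relevance of the neurons above it in proportion to its contribution to them, until relevance scores reach the input features (for example, the pixels of an image). The resulting scores, often shown as a heatmap, reveal which parts of the input were most relevant to the prediction and help open up the black box of deep learning models.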
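Feature importance can be computed in several ways. One model-agnostic option is permutation importance: shuffle a single feature's values across the dataset and measure how much the model's error grows. The minimal pure-Python sketch below assumes a hypothetical hand-written model `toy_model`, synthetic data, and a hand-rolled helper `perm_importance`; in practice a library routine such as scikit-learn's `sklearn.inspection.permutation_importance` would normally be used instead.

```python
import random

def toy_model(x):
    # Hypothetical "black box": relies heavily on x[0], slightly on x[1], and ignores x[2].
    return 3.0 * x[0] + 0.5 * x[1]

def mean_squared_error(model, X, y):
    return sum((model(row) - target) ** 2 for row, target in zip(X, y)) / len(y)

def perm_importance(model, X, y, n_repeats=10, seed=0):
    """Average increase in error when each feature's column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = mean_squared_error(model, X, y)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # breaks the link between feature j and the target
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            increases.append(mean_squared_error(model, X_perm, y) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances

# Synthetic data labeled by the model itself, so the baseline error is exactly zero.
data_rng = random.Random(42)
X = [[data_rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(200)]
y = [toy_model(row) for row in X]
print(perm_importance(toy_model, X, y))
```

Feature 0 should come out far ahead, and feature 2, which `toy_model` ignores, scores exactly zero because shuffling it leaves every prediction unchanged.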
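The LIME procedure can be sketched in a simplified form: sample perturbations around one instance, weight each sample by its proximity using an exponential kernel, and fit a weighted linear surrogate. This illustrates the idea only and is not the `lime` package's API; the model `black_box`, the Gaussian sampling, and the parameters `scale` and `kernel_width` are assumptions chosen for the example, and real LIME also handles feature selection and interpretable input representations.

```python
import math
import random

def black_box(x):
    # Hypothetical nonlinear model standing in for a complex predictor.
    return x[0] ** 2 + math.sin(x[1])

def solve(A, b):
    """Solve the square system A w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b_i] for row, b_i in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (M[r][n] - sum(M[r][c] * w[c] for c in range(r + 1, n))) / M[r][r]
    return w

def explain_locally(model, x, n_samples=500, scale=0.1, kernel_width=0.2, seed=0):
    """Fit a proximity-weighted linear surrogate around x; return (intercept, slopes)."""
    rng = random.Random(seed)
    d = len(x)
    # Accumulate the weighted normal equations (Z^T W Z) w = Z^T W y.
    A = [[0.0] * (d + 1) for _ in range(d + 1)]
    b = [0.0] * (d + 1)
    for _ in range(n_samples):
        offsets = [rng.gauss(0.0, scale) for _ in x]
        z = [xi + oi for xi, oi in zip(x, offsets)]
        weight = math.exp(-sum(o * o for o in offsets) / kernel_width ** 2)  # nearer counts more
        row = [1.0] + offsets  # surrogate features: intercept plus offsets from x
        target = model(z)      # query the black box on the perturbed sample
        for i in range(d + 1):
            b[i] += weight * row[i] * target
            for j in range(d + 1):
                A[i][j] += weight * row[i] * row[j]
    coef = solve(A, b)
    return coef[0], coef[1:]

intercept, weights = explain_locally(black_box, [1.0, 0.0])
print(weights)  # local slopes; compare with the true gradient (2, 1) at this point
```

Because `black_box` is smooth, the surrogate's slopes should land close to its true gradient at the explained point; explaining a different point would yield a different local explanation.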
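SHAP values are Shapley values computed for a particular way of "removing" features. The brute-force sketch below uses the simplest choice, replacing absent features with a fixed baseline, and enumerates every coalition, so its cost grows exponentially with the number of features. The `shap` library relies on faster estimators and typically averages over background data instead. The model and baseline here are hypothetical.

```python
from itertools import combinations
from math import factorial

def model(x):
    # Hypothetical model with an interaction term between features 0 and 1.
    return 2.0 * x[0] + x[1] + x[0] * x[1] + 0.5 * x[2]

def shapley_values(f, x, baseline):
    """Exact Shapley values; features outside a coalition take their baseline value."""
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return f(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            for subset in combinations(others, size):
                s = set(subset)
                # Shapley weight |S|! (n - |S| - 1)! / n! for a coalition S not containing i.
                w = factorial(size) * factorial(n - size - 1) / factorial(n)
                phi[i] += w * (value(s | {i}) - value(s))
    return phi

x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print(phi)
print(sum(phi), model(x) - model(baseline))  # agree up to floating-point rounding
```

Here the interaction term `x[0] * x[1]` is split evenly between features 0 and 1, and the attributions sum to the gap between the prediction and the baseline prediction, which is SHAP's additivity property.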
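Because a decision tree is interpretable by design, the explanation for a prediction is simply the path a sample takes from the root to a leaf. The sketch below uses a hypothetical hand-written tree (the feature names `income` and `debt_ratio` and the thresholds are illustrative only) and records each condition it checks on the way down.

```python
# Hypothetical hand-written tree: each internal node tests one feature against a threshold.
TREE = {
    "feature": "income", "threshold": 50_000,
    "left": {"label": "deny"},
    "right": {
        "feature": "debt_ratio", "threshold": 0.4,
        "left": {"label": "approve"},
        "right": {"label": "deny"},
    },
}

def predict_with_path(node, sample):
    """Return (label, rules), where rules lists every condition satisfied on the way to the leaf."""
    rules = []
    while "label" not in node:
        feature, threshold = node["feature"], node["threshold"]
        if sample[feature] <= threshold:
            rules.append(f"{feature} <= {threshold}")
            node = node["left"]
        else:
            rules.append(f"{feature} > {threshold}")
            node = node["right"]
    return node["label"], rules

label, rules = predict_with_path(TREE, {"income": 72_000, "debt_ratio": 0.25})
print(label, "because", " AND ".join(rules))
# approve because income > 50000 AND debt_ratio <= 0.4
```

For trees trained with scikit-learn, `sklearn.tree.export_text` prints the learned rules in a similar textual form.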
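The backward relevance pass in LRP can be sketched for a tiny fully connected ReLU network. This sketch uses the LRP-epsilon rule, one of several propagation rules in the LRP literature, with hypothetical hand-picked weights. Biases are set to zero so that the total relevance reaching the input matches the output score, up to the small stabilizer `eps`.

```python
def dense(a, W, b):
    # Fully connected layer: z_k = sum_j a_j * W[j][k] + b_k
    return [sum(a[j] * W[j][k] for j in range(len(a))) + b[k] for k in range(len(b))]

def relu(z):
    return [max(0.0, v) for v in z]

def lrp_epsilon(a, W, b, R_upper, eps=1e-6):
    """Redistribute relevance from a layer's outputs back to its inputs `a` (LRP-epsilon rule)."""
    z = dense(a, W, b)
    R = [0.0] * len(a)
    for k, (z_k, r_k) in enumerate(zip(z, R_upper)):
        denom = z_k + (eps if z_k >= 0 else -eps)  # stabilizer avoids division by ~0
        for j in range(len(a)):
            # Input j receives a share of R_k proportional to its contribution a_j * w_jk.
            R[j] += a[j] * W[j][k] / denom * r_k
    return R

# Hypothetical network: 3 inputs -> 2 ReLU hidden units -> 1 output.
W1 = [[1.0, -1.0], [0.5, 2.0], [-1.0, 0.5]]
b1 = [0.0, 0.0]
W2 = [[1.0], [0.5]]
b2 = [0.0]

x = [1.0, 2.0, 0.5]
h = relu(dense(x, W1, b1))
out = dense(h, W2, b2)                      # the score to be explained
R_hidden = lrp_epsilon(h, W2, b2, out)      # output -> hidden relevance
R_input = lrp_epsilon(x, W1, b1, R_hidden)  # hidden -> input relevance
print(R_input, sum(R_input), out[0])
```

Input 1 receives most of the relevance here (about 3.0 of the 3.125 output score), while input 2 gets a small negative score because its weight into the first hidden unit is negative.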

The Future of Explainable AI

As AI continues to evolve and spread across industries, explainable AI techniques will play a crucial role in ensuring that AI systems are deployed responsibly and ethically. By making models more transparent and interpretable, these techniques help users understand, trust, and make effective use of AI-driven insights. As researchers and practitioners continue to advance the field, we can expect new methods and tools that make AI applications more transparent, more accountable, and less prone to bias.

Explainable AI techniques are instrumental in enhancing the transparency and interpretability of AI models, addressing the inherent challenges of opacity and complexity in modern AI systems. By embracing these methodologies, organizations and researchers can foster trust, detect biases, ensure accountability, and pave the way for a more ethical and responsible integration of AI technologies in society.