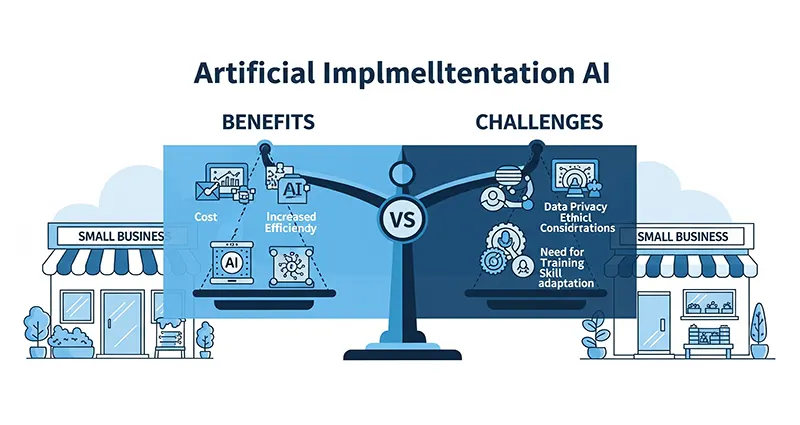

Benefits and Challenges of Implementing AI in Small Businesses

Artificial Intelligence (AI) has emerged as a transformative technology that offers numerous benefits for businesses of all sizes, including small enterprises. While AI can streamline operations, improve efficiency, and drive growth, it also presents unique challenges that small businesses need to navigate. Let’s explore the benefits and challenges of implementing AI in small businesses.

Benefits of Implementing AI in Small Businesses:

1. Increased Efficiency:

AI-powered tools and systems can automate repetitive tasks, allowing small businesses to operate more efficiently and free up employees to focus on strategic initiatives.

2. Enhanced Decision-Making:

AI algorithms can analyze large volumes of data quickly and surface insights that help small businesses base their decisions on evidence rather than guesswork.

3. Improved Customer Experience:

AI-powered chatbots and virtual assistants can provide round-the-clock customer support and personalize interactions, improving the overall experience for a small business's customers.

4. Cost Savings:

By automating tasks and improving operational efficiency, …